Gaming, Monetization, User Acquisition

Report: Chat Engagement Increases Lifetime Value of Players Twentyfold

Dec 22, 2018

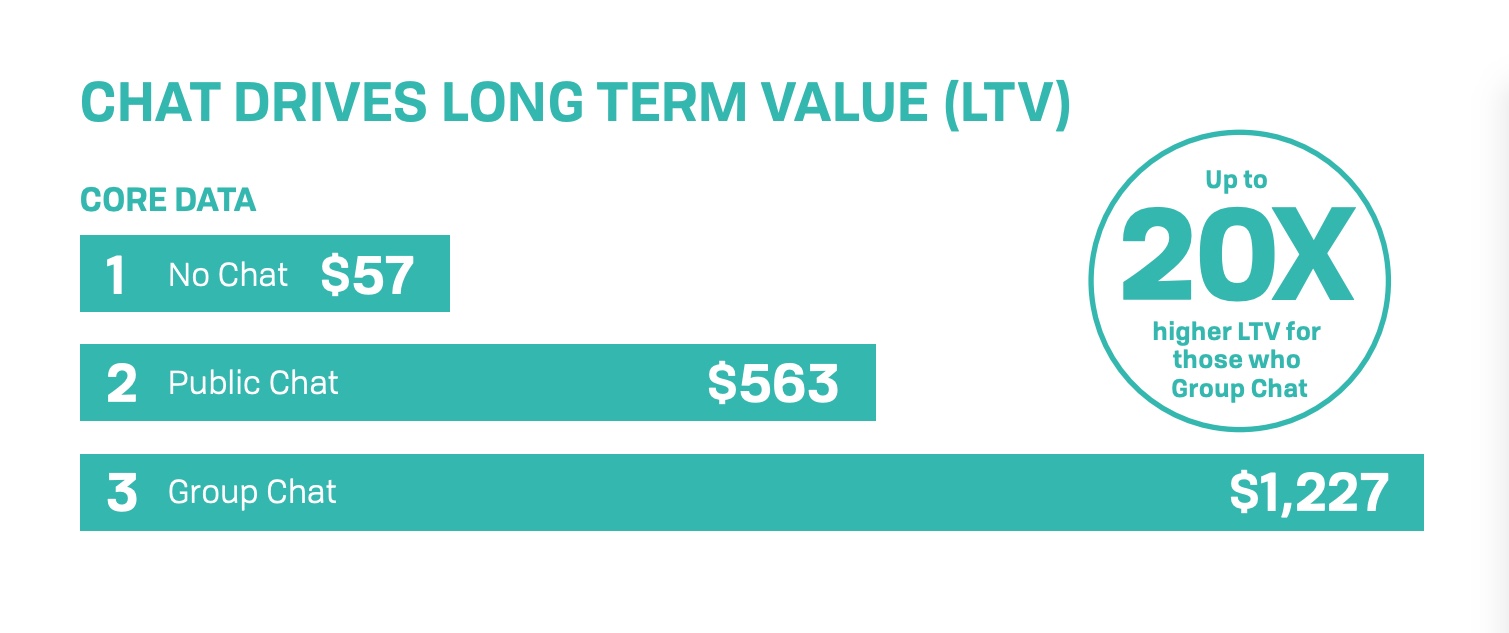

A recent research paper finds that daily participation in moderated chats can increase a gamer’s lifetime value (LTV) up to twentyfold. The report, published by Two Hat Security and available on VentureBeat, draws on data from the in-game community of a Top 10 mobile game in the Action RPG category. It also found that participation in the same game’s public and private group chats quadrupled the number of daily sessions and increased average session length by more than 60%. While those figures are impressive, we’ve known for a while now that community plays a big role in getting players to come back.

More interesting is the assertion that chat moderation (allowing or disallowing specific words and phrases, progressive user sanctions, and so on) improves the user experience, which in turn promotes longer, more frequent gaming sessions and ultimately a higher LTV.

For many mobile game General Managers and Executive Producers, this will recast what is usually a sensitive, dull, cost-oriented discussion about risk management and avoiding the appearance of authoritarianism as a conversation about business opportunity. The current conversation around moderation is not how to filter out antisocial behaviours, but how to scale the chat experiences shared by your most committed and valuable community members.

In the mobile gaming world, motivating users to keep returning to and completing the core loop is integral to maximizing LTV; that’s Game Development 101. What Two Hat’s study adds is evidence that whether or not someone engages in chat is an important factor in how often they come back to the core loop. Further, the quality of a player’s chat experience, which depends heavily on the game’s moderation policies and practices, has a direct impact on how often they return.

“Assist a gamer to make two new connections and engage in one good chat experience per day, and the odds of them making a near-term return visit jump exponentially.”

—Steve Parkis, CEO of Joyful Magic and former SVP of Games for Zynga

According to the study, the LTV of players who did not participate in community chats at all was about $57. The LTV of those who did participate in moderated public chats was $563 (about 10x), and the LTV of those who participated in private group chats was over $1,200, or more than 20x that of non-chatters.
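The multipliers in this paragraph can be checked directly against the reported dollar figures. The short Python sketch below does that arithmetic, treating the approximate $57 baseline as exact and the "over $1,200" private-group figure as a lower bound; the constant and function names are illustrative only.

```python
# LTV figures (USD) as reported from the Two Hat Security study.
# Assumption: the "about $57" baseline is treated as exact, and the
# "over $1,200" private-group figure is used as a lower bound.
LTV_NON_CHATTER = 57
LTV_PUBLIC_CHAT = 563
LTV_PRIVATE_GROUP_MIN = 1200


def multiple(ltv: float, baseline: float = LTV_NON_CHATTER) -> float:
    """Return how many times larger `ltv` is than the non-chatter baseline."""
    return ltv / baseline


public_x = multiple(LTV_PUBLIC_CHAT)
private_x = multiple(LTV_PRIVATE_GROUP_MIN)

print(f"Public chat:   {public_x:.1f}x the non-chatter LTV")
print(f"Private group: {private_x:.1f}x or more (lower bound)")
```

The check gives roughly 9.9x for public chat and roughly 21x or more for private group chat, consistent with the "about 10x" and "more than 20x" figures in the study.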

There seem to be two main considerations for mobile game publishers who want to capitalize on the in-game chat opportunity. First, community chat and moderation cannot be bolted onto your game as an afterthought; the conversation needs to start in the design phase of your mobile game. Over the last few years, the mobile game industry has recognized that what ultimately leads to success is prioritizing the player’s experience around the game, not just the game product itself. Sniping bad guys from the cover of darkness is awesome, but talking a bit of trash about it afterward is the part many players really enjoy.

Second, and this is key, there is no one-size-fits-all solution for chat moderation. You can’t treat an 8-year-old newbie in your mobile game community the same way you treat a hardcore adult player. Talk of violent acts within Candy Crush’s player community may be genuinely concerning, but it is par for the course if you’re playing Call of Duty. A single code of conduct won’t work for every mobile gaming community, and getting it right takes work.

Source: Two Hat Security

Beyond simply establishing policies that allow or disallow specific words and phrases, game publishers need to plan for other potentially disruptive or dangerous social exchanges. Should your “fun” community be allowed to boil over with political arguments during election season? What will you do if a player starts discussing suicide? Game publishers can’t afford to hide behind the fear of censorship anymore: community members want and expect you to get involved and improve not just your game, but also their lives.

“Moderation is not seen anymore only as being there to enforce rules sometimes judged useless or unfair by users, but is appreciated for responsiveness, being there. It is not censorship anymore, it’s part of the overall positive experience.”

—Elise Lemaire, Director of Operations, Rovio

In other words, beyond simply blocking offensive language and banning those who break your terms of service, what is your plan for using moderation to make your mobile game community not just “safer”, but also more supportive, engaging, and enjoyable?

Quoting the Two Hat white paper: “Don’t just mute those who use bad words, pick out the positive things influential users chat about and see how they inspire others to engage and stick around. Discover what your most valuable, long-term users are chatting about and figure out how to surface those conversations for new and prospective users, sooner, and to greater effect.”

General Managers of top mobile gaming titles want to spend their time thinking in terms of creating opportunities, not managing risks. Inverting the conversation to frame chat moderation as a profit center waiting to be unleashed, rather than a cost center to be managed, is an excellent way to accomplish just that.